AI 2027-2029

Recently, a major attempt was made to forecast what near-term ASI¹ timelines might look like. It was published at ai-2027.com, an interactive website presenting a hypothetical scenario that represents some of the authors' modal expectation².

Its predictions may seem jarring or even downright silly to most people, but it may come as a surprise that they are not far from the ordinary expectations of many people in AI and forecasting circles.

- Dario Amodei, co-founder and CEO of Anthropic, recently said “we could have AI systems equivalent to a ‘country of geniuses in a datacenter’ as soon as 2026 or 2027” in his essay The Urgency of Interpretability.

- Sam Altman, co-founder and CEO of OpenAI, said “it is possible that we will have superintelligence in a few thousand days” in his essay The Intelligence Age.

- In 1999, in his book The Age of Spiritual Machines, Ray Kurzweil predicted artificial general intelligence (AGI) in 2029, followed by radical changes to society in the years soon after, and he has stuck by this prediction for the last 26 years.

- Manifold, a non-monetary betting market that’s popular among communities interested in AI and tech forecasting, currently puts the chance of superintelligence by 2030 at 40%.

- Metaculus, a well-known forecasting platform where experts and amateurs make long-term predictions, has a median forecast for the arrival of AGI that has recently fluctuated between 2030 and 2034.

Now, assuming you are sufficiently convinced that the authors of AI 2027 are not alone in their short timelines, let’s dive into their scenario and challenge some of their assumptions. They provide a frankly staggering amount of research (relative to what’s possible for a topic like this) and reasoning to ground their expectations about the near future of AI progress.

Summary

AI 2027 predicts slow progress in AI capabilities (relative to what follows) in 2025 and 2026, roughly the same order of magnitude of capability growth we saw in 2023-2024. The main advances are semi-reliable AI agents in 2025 and increasingly reliable agents in 2026, especially in coding and AI research. These agents can browse the internet for detailed research, build moderately complex features in code repositories, and operate a computer entirely on a user’s behalf, though each still makes mistakes fairly often. The authors also predict that the governments of the USA and China slowly begin to wake up to the dramatic changes on the horizon and to their national security implications.

In contrast to the predicted “slowness” of 2025-2026, and as you might expect from the title, 2027 is when they predict AI progress enters the “takeoff” scenario. Over several decades of discussion about AI capability progress, the term “intelligence takeoff” was coined to describe a scenario in which increasingly capable AIs contribute to their successors’ capabilities, creating a positive feedback loop that accelerates much faster than one might intuitively expect.

By the end of 2027, in their scenario, a single instance of the top AI model is significantly more intelligent than any human who has ever existed, and hundreds of thousands of copies of it are running in parallel.

Although it is beyond the scope of this summary, it’s worth briefly clarifying what this might mean for readers unfamiliar with superintelligent AI. Imagine 300,000 people, each much smarter than Einstein, working and thinking 100x faster than humans to solve disease, aging, scarcity, and every other human problem (or working to kill us all, but that’s a topic for another blog post). Many people predict that this scenario would cause unfathomable change: perhaps as much technological change as separates hunter-gatherers from us today, compressed into a decade.

However, as with any long-term prediction, the assumptions and other inputs to your formula can drastically modify the end results (in this case, the timelines).

Analyzing Assumptions

The authors estimate that a superhuman AI coder (but not yet a superhuman AI researcher, due to relatively worse “research taste”) would result in a 5x speedup compared to a human-only research process. They arrive at this by stacking multipliers from various sources of improved research efficiency enabled by superhuman AI coders. One of these multipliers (copied here from their Takeoff Forecast research) is:

Less waste: With SCs [superhuman coders], research teams can use the vast engineering labor to waste less compute.

- Before every experiment including small-scale experiments, SCs can red-team and bug-test it to reduce the probability that there is some bug which ruins the results.

- Monitor the experiments in real time, noticing and fixing some kinds of problems almost as soon as they happen (by contrast it’s common in frontier companies today for even medium-sized experiments to be running overnight with no one watching them). They can shut down the experiment early as soon as it’s clear that it worked or didn’t work

- Do the same experiments but with less compute e.g. via higher utilization

They guess that this results in a multiplier between 1.2x and 2x. I want to specifically highlight their second bullet point: the idea of AIs monitoring, fixing, and shutting down experiments early. I find this unconvincing for two main reasons. First, I find it difficult to believe that the humans in charge in 2027 will be willing to give AIs this much power. Second, I doubt that a superhuman AI coder (which, by their definition, is not yet a superhuman AI researcher) would perform this job well enough for humans to want to deploy it autonomously. The other two speedups listed, however, make sense to me. As a result, I reduce their less-waste multiplier to 1.1x to 1.5x, and we arrive at a hypothetical total progress speedup of 4x for the superhuman AI coder, rather than their 5x.
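As a rough check on the 5x-to-4x adjustment, here is a minimal sketch that assumes the overall speedup is a simple product of independent multipliers and that each range is summarized by its geometric mean. Both are simplifying assumptions of mine, not necessarily how the Takeoff Forecast combines its factors, and the helper names are hypothetical.

```python
import math


def geometric_mean(low: float, high: float) -> float:
    """Summarize a multiplier range such as '1.2x to 2x' by its geometric midpoint."""
    return math.sqrt(low * high)


def rescale_total(total: float, old_range: tuple, new_range: tuple) -> float:
    """Swap one factor out of a product of independent multipliers (assumed model)."""
    return total / geometric_mean(*old_range) * geometric_mean(*new_range)


# AI 2027's 5x superhuman-coder speedup, with only the "less waste" factor revised
revised = rescale_total(5.0, (1.2, 2.0), (1.1, 1.5))
print(f"revised superhuman-coder speedup: {revised:.2f}x")
```

Under these assumptions the revised total lands at roughly 4.1x, consistent with the rounded 4x figure; using arithmetic midpoints instead of geometric ones gives a similar result.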

Another place where, in my opinion, the authors overestimate the trust AI labs will place in their AI agents is the assumption that improvements in the agents’ capabilities will immediately be applied to AI research. Their takeoff simulation code uses timesteps of a single calendar day when calculating the speedup, which means that improvements to the model on Tuesday begin to speed up AI research on Wednesday. I am doubtful that the frontier AI labs, which regularly discuss fears of catastrophic misalignment and whose staff often have very high values of “p(doom)”³, will be willing to let an AI that was trained (literally) yesterday influence AI research today. I believe a 30-day timestep is more appropriate, since it gives AI labs time to red-team each new version of the model internally.
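Why the timestep matters can be illustrated with a toy model. This is a sketch under loudly stated assumptions, not the authors’ simulation: progress is measured in hypothetical “human-only research days,” the research speedup is assumed to grow exponentially with progress, and the speedup is frozen for each timestep, so a new model’s gains only count from the start of the next step.

```python
import math


def days_to_target(step_days: int, target: float = 200.0, growth: float = 0.01) -> int:
    """Toy takeoff model: calendar days until cumulative progress reaches `target`.

    Assumptions (not AI 2027's model): speedup = exp(growth * progress), and the
    speedup is only refreshed at the start of each step of length `step_days`.
    """
    progress = 0.0
    elapsed = 0
    while progress < target:
        speedup = math.exp(growth * progress)  # set from capabilities at step start
        progress += speedup * step_days        # research completed during this step
        elapsed += step_days
    return elapsed


daily = days_to_target(step_days=1)
monthly = days_to_target(step_days=30)
print(f"1-day steps: {daily} days, 30-day steps: {monthly} days")
```

In this toy setup the 30-day lag stretches the time needed to reach the same progress target noticeably; the exact ratio depends entirely on the assumed growth curve, which is why it is only an illustration of the direction of the effect.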

Compute Production and Capacity

The authors estimate that 4 million H100-equivalents⁴ of computing power (they refer to these as "H100e") existed globally at the end of 2023, but Epoch AI (their own source) appears to estimate that this figure was reached in mid-2024. That pushes the authors’ total compute timeline back by 6 months. The authors expect compute production to grow 2.25x per year⁵, which amounts to ~7% growth per month.

However, it may be a mistake to assume 2.25x growth in compute capacity/production every year. ChatGPT o3 Deep Research predicts a 3-5x increase in compute capacity over the next 2 years, and standard o3 predicts a 4x increase, so the two are in line with each other. A 4x increase over 24 months amounts to about 6% month-over-month growth. Thus, assuming 6 million H100e at the end of 2024 and a 4x increase from then until the end of 2026, we arrive at 24 million H100e.
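The monthly growth rates above come from taking the 12th or 24th root of the total multiplier. A minimal sketch of that conversion, assuming constant compounding (the helper name `monthly_rate` is hypothetical):

```python
def monthly_rate(total_multiplier: float, months: int) -> float:
    """Constant month-over-month growth rate that compounds to `total_multiplier`."""
    return total_multiplier ** (1 / months) - 1


authors = monthly_rate(2.25, 12)  # AI 2027: 2.25x per year
revised = monthly_rate(4.0, 24)   # revised: 4x over two years
print(f"authors: {authors:.1%} per month, revised: {revised:.1%} per month")
```

This gives about 7.0% and 5.9% per month respectively, matching the rounded ~7% and ~6% figures in the text.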

Assuming growth slows a bit further, perhaps to 5% per month in 2027⁶, we can expect 32 million H100e by mid-2027, instead of the 60 million that the authors predict.
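The projection is plain compound growth. This sketch reproduces it, assuming the 6 million H100e starting point for the end of 2024 and a constant 5% monthly rate for the first six months of 2027 (`project` is a hypothetical helper):

```python
def project(start: float, monthly_growth: float, months: int) -> float:
    """Compound `start` at a constant monthly growth rate for `months` months."""
    return start * (1 + monthly_growth) ** months


eoy_2026 = 6e6 * 4                     # 4x from end of 2024 to end of 2026
mid_2027 = project(eoy_2026, 0.05, 6)  # assumed 5% per month through June 2027
print(f"end of 2026: {eoy_2026 / 1e6:.0f}M H100e, mid-2027: {mid_2027 / 1e6:.1f}M H100e")
```

The result is about 32.2 million H100e, which rounds to the 32 million quoted above.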

At first glance, we might expect this correction to delay the predicted arrival of a superhuman AI coder, since, as we’ll see in the next section, training compute is one of the four major inputs to AI progress speed. However, neither of the authors’ two methods for forecasting when superhuman coders arrive depends on their predicted amount of available compute.

Automated AI Researchers

In the April 3rd episode of the Dwarkesh Podcast, Dwarkesh Patel asks the authors why, if the mental labor of improving AI software is so important, the major labs aren’t increasing headcount as quickly as possible. Daniel Kokotajlo, the first author, responds that they see four inputs into AI progress speed: number of researchers, researcher serial speed (relatively constant for humans), research taste (i.e. the quality of selected experiments), and training compute. He says that, once AI research is fully automated, the main bottlenecks will be training compute and research taste. In other words, the reason the superhuman AI researcher’s multiplier is 25x, rather than something higher, is limited training compute and research taste. If that’s the case, then cutting available GPUs almost in half (relative to their expectation) would make training compute even more of a bottleneck, and the extra hundreds of thousands of AI researchers working at 40x serial speed would not yield as much gain as they otherwise would.

This matches up with a recent quick exchange I had with Daniel:

Me: I was curious as to how you all came up with the "6% of compute will go to research automation?"

Daniel: It was just an educated guess! I'm lately thinking it should have been a smaller fraction probably, maybe more like 1%, to free up more compute for experiments.

They did not go into detail on Dwarkesh’s specific question about why AI labs aren’t hiring more humans today (likely because he immediately paired it with another question). However, I think it’s a very important thread to pull on, so let me humbly try to answer for them.

One way to look at it: if OpenBrain (the leading AI lab in the AI 2027 scenario) wanted to hire an additional *really good* AI researcher today, the marginal cost would be a yearly total compensation in the millions of dollars. That researcher might begin contributing strongly within a few weeks or months. Alternatively, hiring very smart programmers and math/physics experts who are not AI researchers would perhaps cost slightly less, but they might not begin contributing strongly for, say, 6-12 months. As with most things in life, we need to think in terms of tradeoffs and opportunity cost. Instead of allocating $3 million to pay Researcher #999 over 2 years, OpenBrain could purchase 23 GB200 units and get 6.53 × 10^24 total INT8 FLOPs over that same timeframe (assuming a Model FLOP Utilization of 40%). Thus, rather than training ~5 more non-AI-researchers to become AI researchers, they would have enough FLOPs to recreate GPT-4o (rounding down slightly, given likely architecture improvements since the original GPT-4o) and more, since GPUs remain useful for longer than 2 years.
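The FLOP figure above is a product of hardware count, per-unit peak throughput, utilization, and wall-clock time. The sketch below shows that formula and, rather than asserting an official GB200 specification, backs out the per-unit INT8 peak rate that this post’s own numbers imply. It assumes 365-day years, and the helper names are hypothetical.

```python
SECONDS_PER_YEAR = 365 * 24 * 3600  # assumption: 365-day years


def total_flops(units: int, peak_flops_per_unit: float, mfu: float, years: float) -> float:
    """Useful operations delivered: units x peak rate x utilization x time."""
    return units * peak_flops_per_unit * mfu * years * SECONDS_PER_YEAR


def implied_peak(total: float, units: int, mfu: float, years: float) -> float:
    """Invert total_flops: the per-unit peak rate a quoted total implies."""
    return total / (units * mfu * years * SECONDS_PER_YEAR)


# Figures from this post: 23 GB200 units, 40% MFU, 2 years, 6.53e24 INT8 ops
peak = implied_peak(6.53e24, units=23, mfu=0.40, years=2)
print(f"implied peak per GB200: {peak / 1e15:.1f} PFLOP/s (INT8)")
```

The implied rate is roughly 11 PFLOP/s per unit; comparing it against NVIDIA’s published dense and sparse INT8 figures is a useful sanity check on the 6.53 × 10^24 estimate.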

However, the marginal cost of adding a researcher drops if the researcher is an AI. Using the authors’ unmodified numbers, if the leading AI lab uses 500,000 H100e to run 50,000 superintelligent AI researcher agents, then the hardware to run one AI researcher (which works at 30x human speed) costs $150,000 upfront (assuming each H100e costs $15,000), and the lab can keep running it for years. This is about an order of magnitude cheaper than paying a top-tier human AI researcher for a single year.
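The cost comparison is simple division. This sketch uses the authors’ fleet numbers and the $15,000-per-H100e price from the text, plus an assumed $1.5 million per year for a top-tier human researcher (the $3 million over 2 years used earlier); `hardware_cost_per_agent` is a hypothetical helper.

```python
def hardware_cost_per_agent(total_gpus: float, agents: int, cost_per_gpu: float) -> float:
    """Upfront hardware cost attributable to one AI researcher agent."""
    return total_gpus / agents * cost_per_gpu


ai_cost = hardware_cost_per_agent(500_000, 50_000, 15_000)  # one-time purchase
human_cost = 1_500_000  # assumed yearly compensation for a top-tier human researcher
ratio = human_cost / ai_cost
print(f"AI researcher hardware: ${ai_cost:,.0f}; one human-year costs {ratio:.0f}x more")
```

Note that this ignores electricity, networking, and datacenter costs for the AI side, so the true gap is somewhat smaller than the raw ratio.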

Running an Updated Takeoff Simulation

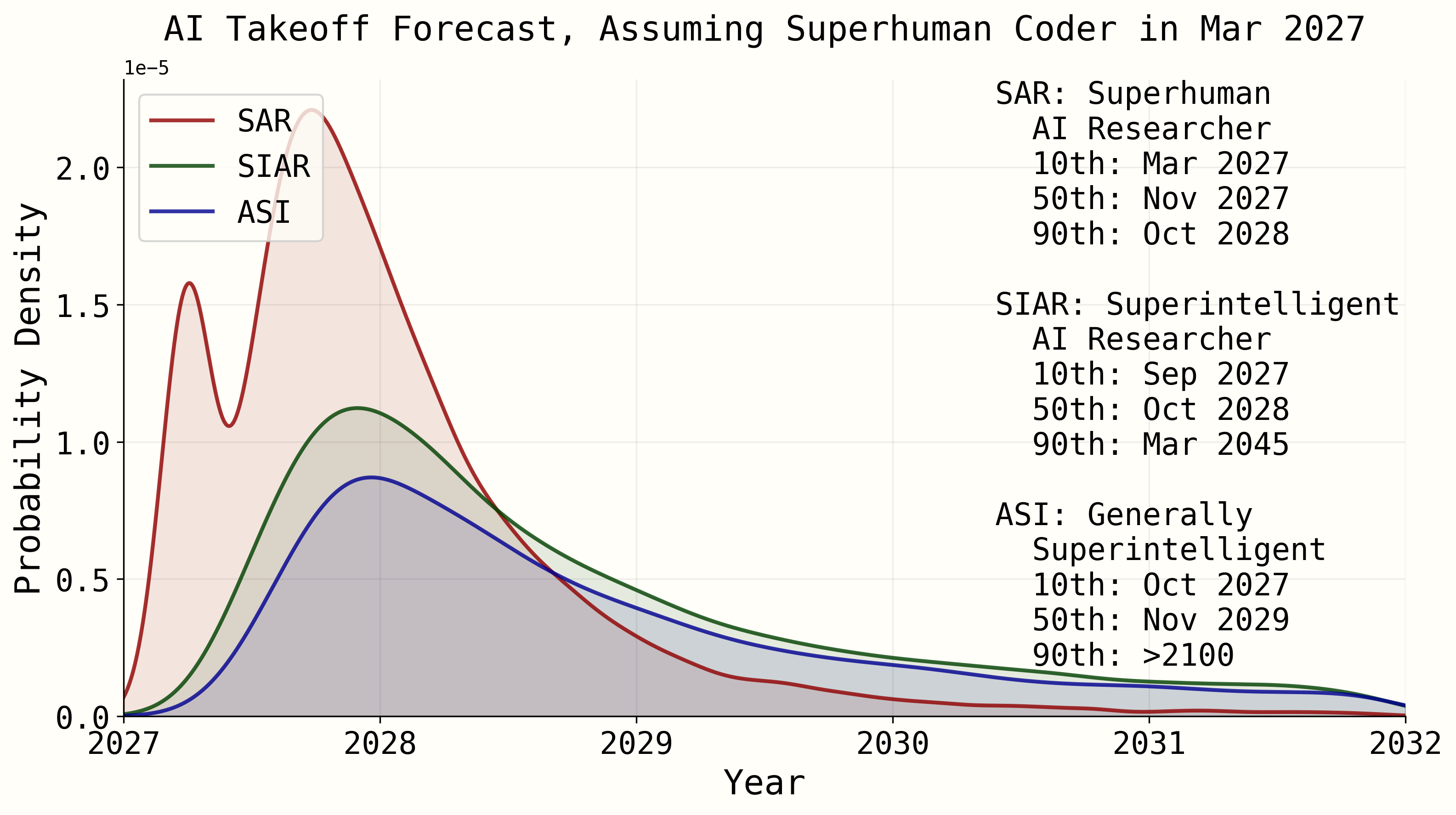

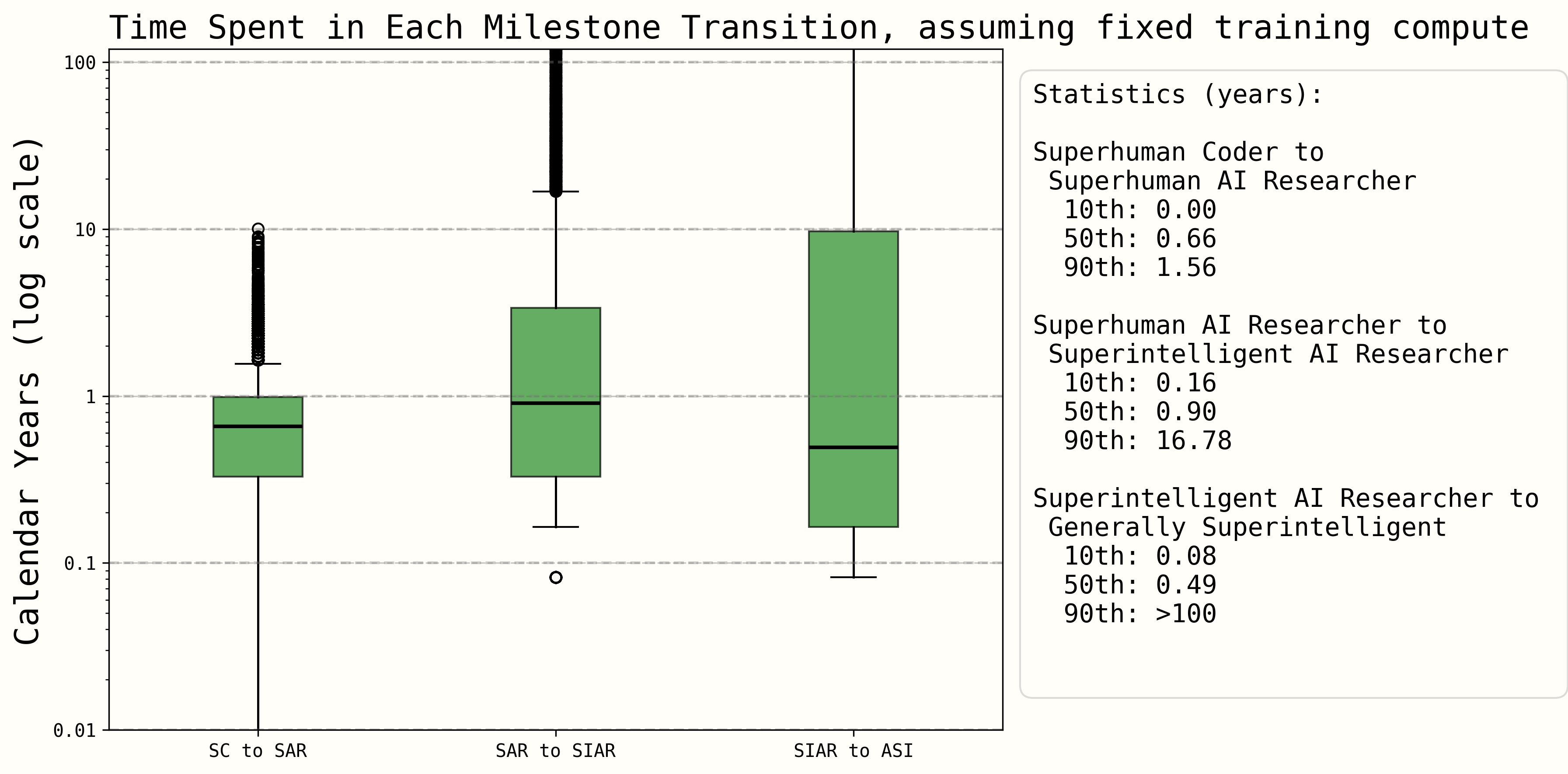

Plugging in our updated parameters from Analyzing Assumptions (a 4x speedup for the superhuman coder and 30-day timesteps), plus a reduced 10x speedup for the superhuman AI researcher based on the reasoning in Automated AI Researchers (and, following the same methodology as AI 2027, 100x for the superintelligent AI researcher and 800x for general superintelligence), the authors’ takeoff simulation produces updated estimates.

To highlight some differences from their estimates, here is the median expected duration of each stage:

- The entire takeoff scenario (Superhuman Coder -> General Superintelligence): 23 months, or almost 2 years (instead of 9.5 months in AI 2027)

- Superhuman Coder -> Superhuman AI Researcher: 7 months (instead of 4 months)

- Superhuman AI Researcher -> Superintelligent AI Researcher: 10 months (instead of 3.5 months)

- Superintelligent AI Researcher -> General Superintelligence: 6 months (instead of 2 months)
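The stage durations listed above add up to the whole-takeoff figures, and their ratio is what “over twice as long” refers to. A small check, using SC, SAR, SIAR, and ASI as shorthand for the four milestones; summing per-stage medians is only an approximation in general, since medians are not additive, but here it matches the reported totals.

```python
# Median months per takeoff stage, as listed above
AI_2027 = {"SC -> SAR": 4.0, "SAR -> SIAR": 3.5, "SIAR -> ASI": 2.0}
UPDATED = {"SC -> SAR": 7.0, "SAR -> SIAR": 10.0, "SIAR -> ASI": 6.0}


def total_months(stages: dict) -> float:
    """Sum the median duration of each stage."""
    return sum(stages.values())


ratio = total_months(UPDATED) / total_months(AI_2027)
print(f"{total_months(AI_2027)} -> {total_months(UPDATED)} months ({ratio:.2f}x longer)")
```

The updated takeoff comes out about 2.4x longer than the original.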

Although this might not seem like much of a difference (2 years is still arguably an unacceptably fast transition from humans being the smartest entities on Earth to computers leaving us in the dust [or as dust, if they are misaligned]), the updated numbers, if true, would give us over twice as long to set up systems of human oversight, use the intermediate AIs to solve mechanistic interpretability or alignment, and/or see changes in major world leaders (I swear something important happens in January 2029, but I can’t quite place it).

It’s worth noting that this post is not an indictment of the authors of AI 2027. They clearly put much, much more thought, effort, and skill into their work than I’ve done here, and, most importantly, these takeoff timelines fall well within their proposed confidence intervals. In fact, this post is the opposite of an indictment: it is my attempt at a minor contribution to their work, because I find it so inspiring.